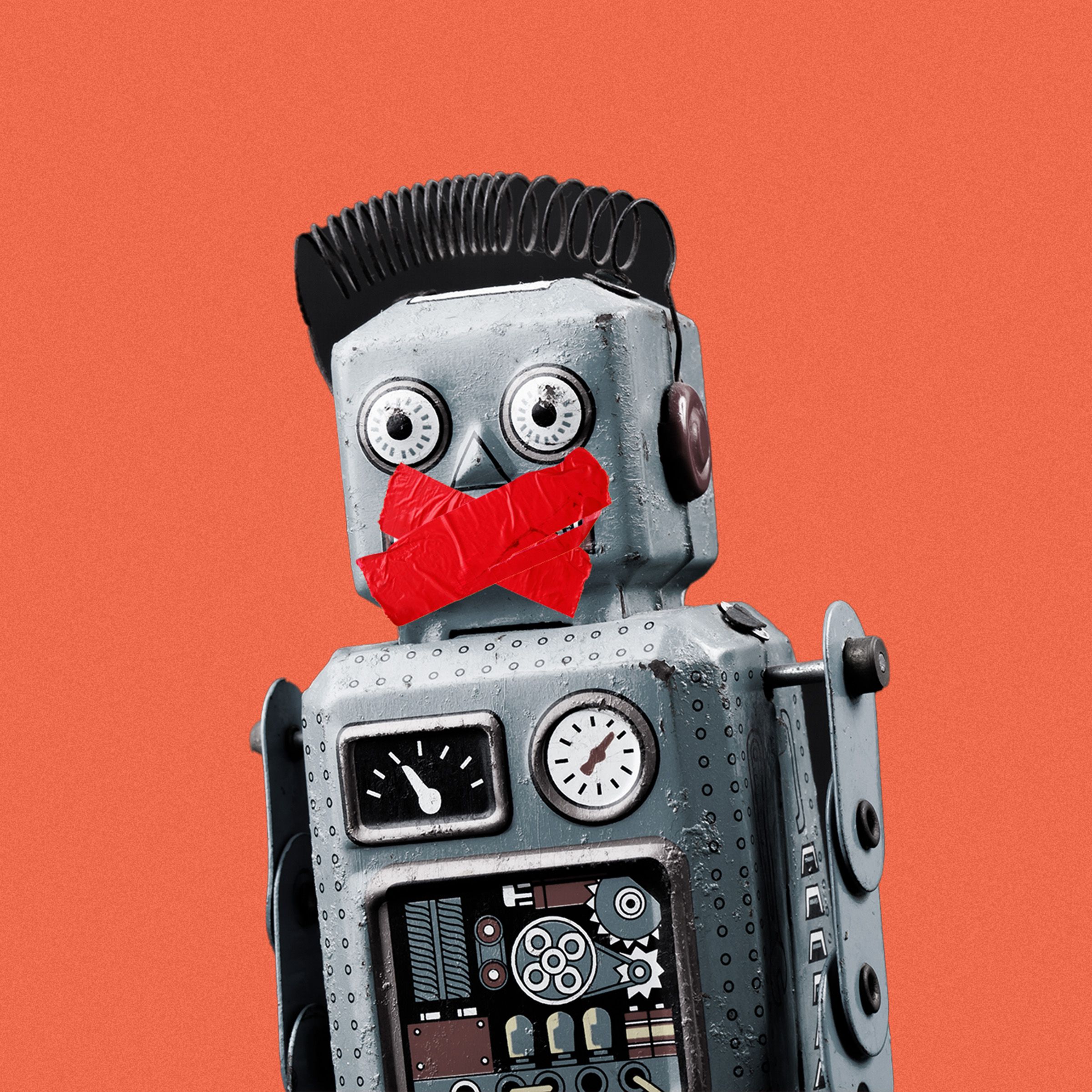

Anthropic Will Use Claude Chats for Training Data. Here’s How to Opt Out

Anthropic, a leading AI company, has announced that it will use chats with Claude, its AI chatbot, as training data for its AI models.

This decision has raised privacy and data security concerns among users. If you use Claude and do not want your chats used for training, there are a few steps you can take.

First, log in to your Claude account and open the settings section. Look for the option to manage your data preferences, then opt out of having your chats used for training purposes.

Alternatively, you can contact Anthropic directly and ask to have your data excluded from its training datasets. The company should have a process in place for handling these requests.

It is important to note that opting out of data sharing may limit the functionality of the AI models trained by Anthropic. However, your privacy and data security are paramount, and you have the right to control how your data is used.

Anthropic has stated that they take user privacy seriously and are committed to upholding the highest standards of data protection. They have also assured users that all data used for training is anonymized and aggregated to protect individual identities.

If you have any further questions or concerns about how your data is being used by Anthropic, do not hesitate to reach out to their customer support team for more information.

In conclusion, if you use Claude and do not want your chats used to train AI models, you can opt out in your account settings or by contacting Anthropic directly.